Introduction:

Since the beginning of the Internet, when people started visiting websites and (hopefully) buying stuff online, businesses have wanted to know exactly what people were doing in their virtual web storefronts. From the humble page view counter, to cookies and tracking tools, the industry of web analytics was born.

Today, Web Analytics is a well-established tool for gaining knowledge and insights about the visitors to our websites by tracking their behavior as they click through from page to page. The demand for Web Analytics is growing: a number of online sources put the market's current value at an estimated US $600 million, with double-digit growth every year (see the 2009 study by Forrester Research). Web Analytics comes in a wide variety of solutions, ranging from simple modules that plug into full-blown Business Intelligence suites to cloud-based, service-oriented analytics tools.

Your humble Roadchimp was recently given the task of advising a close friend and entrepreneur on devising a Web Analytics solution for a growing online business. And so I decided it would be an excellent opportunity to develop a simple primer for our lovely primate readers.

Further Reading:

Rather than going into too much detail about Web Analytics, I’m providing a quick link to the Wikipedia page that contains a great overview of the topic. You’re one quick click away from obtaining some basic definitions of commonly used terms as well as links to some of the more popular Web Analytics platforms out in the market.

Scenario:

SwingFromLimb (a.k.a. Swing) is a rapidly growing online social tool that helps active-minded chimps find sports facilities closer to them. Swing’s customers come from all walks of life and are predominantly primates who are interested in sports activities of all types, from Tree Canopy Judo to Prehensile Yoga and Banana Kickboxing. Swing connects activity enthusiasts to sports facility owners by listing thousands of classes online that users sign up for via the Swing website or App. As part of its enhanced service offering, Swing offers a club management application to facility managers to help them market their classes to online users.

Goals:

Swing wants to analyze the online behavior of its users in order to identify useful information, such as the types of classes that are more popular in a particular part of the jungle, the times of day that chimps book their classes online, and seasonal variations in class attendance. Seasonal swings are especially noticeable in the New Year, when holy Saint Chimpolous rides up from the tropics in his banana sled and slides down our chimneys to deliver overripe fruit while consuming all of our household cleaners, and the ensuing hordes of soft-bellied and guilt-ridden chimps make a beeline for their nearest gym after the holidays. (yes… serious readers I wrote a funny)

Details that the Swing management would like to obtain about their customers are listed below:

- Methods of Accessing Content: The types of web browser, screen resolution, language and plug-in support (Java, Flash etc) used to access the site or App.

- Location of Visitors: Using IP Address filtering and location awareness, we can determine which geographical locations users are connecting to the website from as well as which mobile service providers they may be using.

- In-Site Behavior: Which pages users click to most frequently; how long users tend to dwell on a page before clicking to the next page and also what pages users visit last before leaving the site.

- Access Patterns: Access behavior for each user based on criteria such as the time of day; geographical location (home or office) and days of the week.

- Web Referrals: Which search engines, blogs or websites refer users to the website.
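To make the in-site behavior metrics a little more concrete, here is a minimal Python sketch of how dwell time and the exit page could be worked out from a log of page views. It is only an illustration: the `visit` list, its page paths and its timestamps are made up, and a real analytics package does this work for you behind the scenes.

```python
from datetime import datetime

# Hypothetical page-view log for one visit: (timestamp, page path).
# The paths and times are made up for illustration.
visit = [
    (datetime(2013, 1, 8, 16, 0, 0), "/"),
    (datetime(2013, 1, 8, 16, 2, 0), "/search"),
    (datetime(2013, 1, 8, 16, 2, 45), "/classes/prehensile-yoga"),
    (datetime(2013, 1, 8, 16, 4, 15), "/checkout"),
]


def dwell_times(page_views):
    """Return (page, seconds) pairs for every page except the last.

    Dwell time is the gap between one page view and the next, so the
    final page of a visit has no measurable dwell time.
    """
    ordered = sorted(page_views)
    return [
        (page, (next_time - time).total_seconds())
        for (time, page), (next_time, _) in zip(ordered, ordered[1:])
    ]


def exit_page(page_views):
    """Return the last page viewed before the visitor left the site."""
    return max(page_views)[1]
```

For the sample visit, `dwell_times(visit)` gives 120, 45 and 90 seconds for the first three pages, and `exit_page(visit)` returns `/checkout`.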

Google Analytics:

Our task is to develop a prototype Analytics solution for Swing, so that management can get an idea of what a common Web Analytics solution can offer. After this evaluation phase, we can provide a recommendation on the most cost-effective and scalable solution for the company. Looking at the wide range of tools out there, we’ve decided to start with Google Analytics.

Google Analytics is a service provided by Google and, at last count, is the analytics platform of choice on over 17 million websites. It was originally developed by the Urchin Software Corporation, which Google acquired in 2005. The product is delivered as a freemium service, features integration with Google AdWords, and requires a number of cookies to be set on the end user’s computer.

Implementing Google Analytics

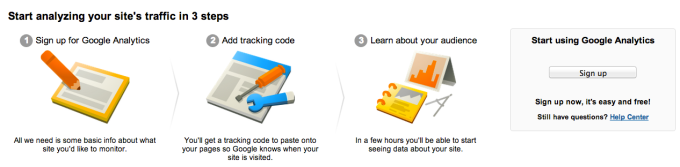

To successfully implement the platform, Google recommends a simple 3-step process on the Google Analytics website:

- Sign up for Google Analytics

- Add tracking code

- Learn about your audience

We shall cover these steps in detail below:

1. Sign up for Google Analytics

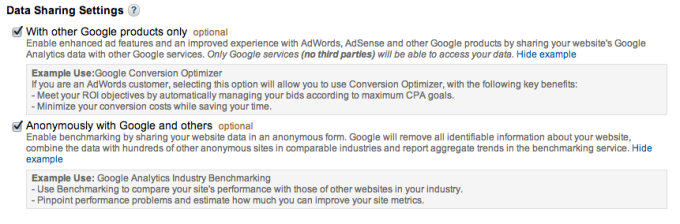

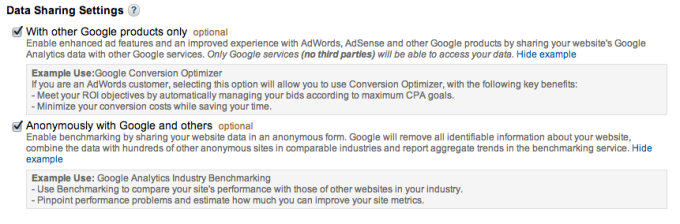

The first step involves signing in with a Google account (or creating one if you don’t have one already) and entering some of your website’s details. One interesting note is that you can choose whether to anonymize your data and share it with other users, and whether to enable integration with other Google services.

Before signing up for the service, you have to agree to the Google Analytics Terms of Service, which, among other things, declare that this is a free service for up to 10 million hits per month.

2. Add Tracking Code

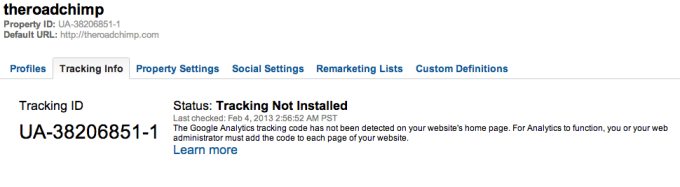

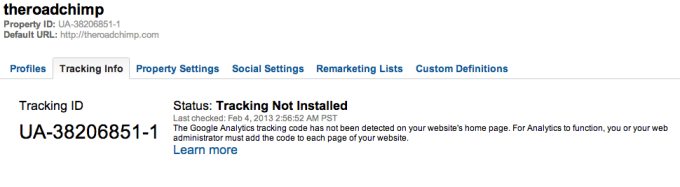

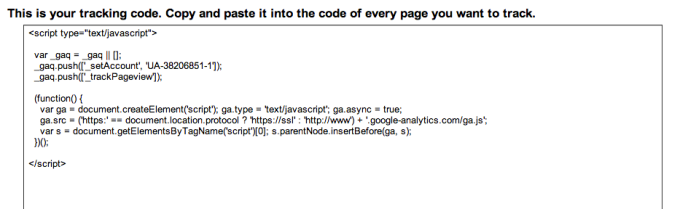

Once you have completed the initial setup, a unique tracking code is generated for your website and you are directed to the Google Analytics dashboard. There you can adjust some basic configuration settings and copy the tracking code, which needs to be inserted into the HTML of your website.

The tracking code is a short block of JavaScript and looks like this:

The tracking code can be implemented in three different ways:

- Static Implementation: The tracking code is copied and inserted into the head section of each HTML page.

- PHP Implementation: You create a file called analyticstracking.php that contains the tracking code block. This file is then included in each PHP template page.

- Dynamic Content Implementation: For dynamically generated sites, we keep the code block in one place and pull it in through an include or template reference, so that it is part of every page served to a browser.
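As a rough illustration of the dynamic approach, here is a minimal Python sketch that adds a snippet to a page just before the closing `</head>` tag. The `add_tracking` helper and the `TRACKING_SNIPPET` placeholder are my own assumptions for the example and are not part of Google Analytics; a real site would normally rely on its template engine's include mechanism instead.

```python
# Hypothetical stand-in for the snippet Google Analytics generates for your
# site; paste the real one from your dashboard here.
TRACKING_SNIPPET = "<script>/* tracking code from the dashboard */</script>"


def add_tracking(html, snippet=TRACKING_SNIPPET):
    """Insert the snippet just before the closing </head> tag.

    Pages that have no </head> tag, or that already contain the snippet,
    are returned unchanged.
    """
    marker = "</head>"
    if snippet in html or marker not in html:
        return html
    head, rest = html.split(marker, 1)
    return head + snippet + "\n" + marker + rest


page = "<html><head><title>Swing</title></head><body>Classes</body></html>"
tracked = add_tracking(page)
```

Keeping the snippet in one place means a change to the tracking code only has to be made once. Note that this simple version only matches a lowercase `</head>` tag.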

That’s the hardest part of the work done. There are no specific firewall requirements to configure on your servers, since it is your visitors’ end devices, not your servers, that connect to Google’s servers.

The following URLs are used to download the tracking script and to pass page statistics to Google’s servers:

https://ssl.google-analytics.com/__utm.gif

http://www.google-analytics.com/ga.js

https://ssl.google-analytics.com/ga.js

3. Learn about your audience

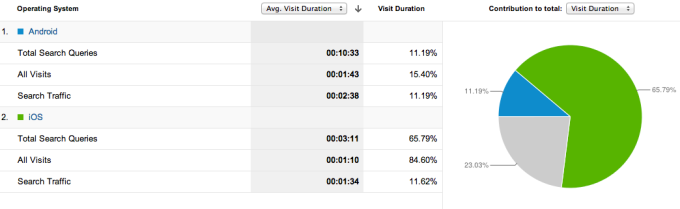

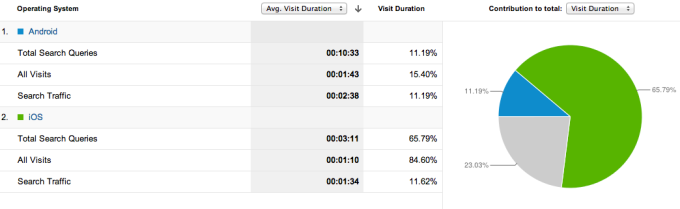

This next step is where the implementation pays off: once the tracking code is in place, we can start to analyze data about our users. A quick login to the dashboard shows us interesting data about users, and we can create customizable reports on a multitude of things, including the amount of time that Android users versus iPhone users spend on the site.

So how exactly does Google Analytics work? Once we’ve configured Swing’s web pages to serve up the tracking code, the Google Analytics servers will start collecting data about users visiting our site. Let’s explore this mechanism briefly:

Google uses three sources to obtain information about visitors to our sites:

- HTTP requests: A typical HTTP request from a visitor’s browser contains information about the type of browser, the referrer (which site the user is coming from) and the preferred language.

- Browser/system information: The Document Object Model (DOM) is a way of representing pages as a tree structure that was developed and standardized by the World Wide Web Consortium (W3C) and is supported by most browsers. Using the DOM, the tracking code can read detailed browser information such as Flash and Java support, screen resolution and keyboard support, and pass it on to Google’s servers.

- Cookies: Google uses first-party cookies, which contain small amounts of information about the individual user’s current session on a website and can be passed from one page to another as the user browses the site.
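To give a feel for the first of these sources, here is a minimal Python sketch that picks the browser, referrer and language out of a raw HTTP request. The `visitor_info` function and the sample request are assumptions of mine for illustration only (the host, referrer and user agent are made up); this is not how Google's servers are actually implemented.

```python
def visitor_info(raw_request):
    """Pick out the headers that describe the visitor from a raw HTTP request."""
    headers = {}
    # Skip the request line, then read "Name: value" pairs until the blank line.
    for line in raw_request.split("\r\n")[1:]:
        if not line:
            break
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return {
        "browser": headers.get("user-agent"),
        "referrer": headers.get("referer"),  # the header name is spelled "Referer"
        "language": headers.get("accept-language"),
    }


# Hypothetical request; the host, referrer and user agent are made up.
sample = (
    "GET /classes HTTP/1.1\r\n"
    "Host: www.example.com\r\n"
    "User-Agent: ExampleBrowser/1.0\r\n"
    "Referer: http://search.example.com/?q=banana+kickboxing\r\n"
    "Accept-Language: en-GB,en;q=0.8\r\n"
    "\r\n"
)
info = visitor_info(sample)
```

Header names are matched case-insensitively here, and any header that is missing simply comes back as `None`.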

The tracking code works according to the following steps (taken from the Google Analytics site for developers):

- A browser requests a web page that contains the tracking code.

- A JavaScript Array named `_gaq` is created and tracking commands are pushed onto the array.

- A `<script>` element is created and enabled for asynchronous loading (loading in the background).

- The `ga.js` tracking code is fetched, with the appropriate protocol automatically detected. Once the code is fetched and loaded, the commands on the `_gaq` array are executed and the array is transformed into a tracking object. Subsequent tracking calls are made directly to Google Analytics.

- Loads the script element to the DOM.

- After the tracking code collects data, the GIF request is sent to the Analytics database for logging and post-processing.

In a nutshell, the JavaScript that is pasted into the HTML pages on your website instructs the visitor’s browser to connect to the Google Analytics servers and download some additional code. The code is loaded into the browser’s DOM tree and continues to push information to the Google Analytics servers whenever a user performs an action in their browser while viewing your site.

A variety of visitor actions can be tracked, and I’ve included a list below to give you an idea of what’s possible:

- Loading a page

- An Event such as

  - Playing a video

  - Downloading a file

  - Clicking on a button

  - Hovering the mouse on the screen

- An e-commerce transaction on your website

  - Adding an item to a shopping cart

  - Transaction details such as Transaction ID

- Customized parameters

  - A member logs in with special privileges

By collecting each of these events, we can start to understand fairly accurately what a user does on each visit. For example, a user loads the website from her Android-powered phone at 4pm on a Tuesday afternoon, while sitting in a busy commercial part of the city. After scrolling down the main page for 2 minutes, she searches for a particular gym that she visited 2 months ago to see which classes are available that day. She quickly finds a class and signs up online, paying for the class using her stored account information.

Making Sense of the data

So what do we do with this information? Well, for an individual user, not much really, as Web Analytics packages do not present personal information about users visiting the site. On the other hand, if we have hundreds or thousands of users connecting to a site over time, we can start analyzing the data to identify trends that may be useful to the business. For example, if we’re seeing an upward trend in the number of visitors searching for a particular product or service in a given area, we might be able to find similar products and services to cater to that demand. In the case of Swing’s management, this might mean advising facility owners in that region to offer more of a particular type of class. No wonder over 30 million websites on the Internet use some form of Web Analytics.
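As a small illustration of this kind of trend-spotting, here is a minimal Python sketch that counts bookings by region and by hour of day. The `bookings` data and both helper functions are hypothetical and made up for this example; in practice, these numbers would come out of the reports in your analytics dashboard.

```python
from collections import Counter

# Hypothetical, anonymised booking events: (region, class type, hour of day).
bookings = [
    ("north canopy", "Prehensile Yoga", 16),
    ("north canopy", "Prehensile Yoga", 17),
    ("north canopy", "Banana Kickboxing", 16),
    ("river delta", "Tree Canopy Judo", 7),
    ("river delta", "Prehensile Yoga", 16),
]


def most_popular_class(events, region):
    """Return the most-booked class type in a region, or None if no bookings."""
    counts = Counter(kind for place, kind, _ in events if place == region)
    return counts.most_common(1)[0][0] if counts else None


def busiest_hour(events):
    """Return the hour of day with the most bookings, or None if no bookings."""
    counts = Counter(hour for _, _, hour in events)
    return counts.most_common(1)[0][0] if counts else None
```

With the sample data, Prehensile Yoga is the most popular class in the north canopy and 4pm is the busiest booking hour, which is exactly the sort of hint Swing could pass on to facility owners.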

Conclusion:

In this article, we went over a simple implementation of a Web Analytics tool and also explored the basic mechanics of Google Analytics. In future posts in this series, we will look into performing some customization and also collecting best practices and recommendations for users interested in implementing a Web Analytics tool.
